Also, because this runs so fast, we can be doing this hourly instead of daily. You can see that fixed assets instead of insert overwrite we can use merge into. Then just change from the ICDC table, and then add the dedupe logic. Also, you can use insert into if you like, or if there is a dedupe logic or other workflows running, trying to dedupe the data.

Zero Waste, Radical Magic, and Italian Graft – Quarkus Efficiency Secrets

I hope this example demonstrates how simple and powerful it is for users working with Maestro using IPS. Then they have to do this every day, and rewrite the past 14 days of data. This pipeline can take quite a while, so they have to run daily. Then for this table, consumers, we may have hundreds of workflows consumed from this table. They build aggregation pipelines to power their business use cases. Then here in this case, just doing data aggregation, incremental cost also have to consume the data from the past 14 days as well.

- This is important, not only because of efficiency, but also because lots of times, we cannot access user data because of security requirement or the privacy requirement.

- Here, the parameter of my query includes the SQL query trying to do something.

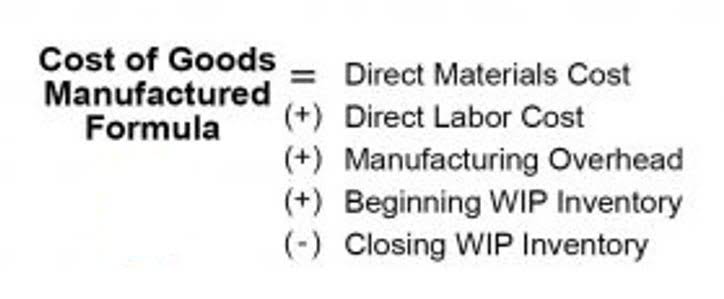

- Incremental costs are the costs linked with the production of one extra unit, and it considers only those costs that tend to change with the outcomes of a particular decision.

- If a reduced price is established for a special order, then it’s critical that the revenue received from the special order at least covers the incremental costs.

- If a reduced price is established for a special order, then it’s critical that the revenue received from the special order at least covers the incremental costs.

- It provides lots of information to help us build a mechanism to be able to catch the change without actually reading the user data at all.

Examples of Incremental Costs

- Another approach is that we can just ignore the late arriving data that sometimes works, especially the business decision we have to make.

- However, none of it will include the fixed costs since they will not change due to volume fluctuation.

- To give you an idea of how knowing your incremental and marginal cost leads to better financial planning, let’s get back to the shirt business example.

- Next, I will use an example to show how this approach works.

- This analysis is also critical for make-or-buy decisions, helping businesses compare the costs of in-house production with outsourcing.

- We have to support a mix of those pipelines as well.

Also, it improved the data freshness as we can now run hourly. In that case, we can read the Iceberg metadata to quickly get the range, like min or max from all the tables for a given column. The mean is P1, and max is P2, and then I can load the table, the partition P1, P2, and the partition P1, P2 during the ETL workflow and then during the processing there.

How is marginal revenue related to the marginal cost of production?

We observe the users in our platform usually follow those two common patterns to deal with late arriving data. In that scenario, the workflow owner or the job owner, usually have a lot of business domain knowledge, so they can tell how long they should look back. If the data is older, then that window likely does not have much business value there, so they can discard. As seen in Case 2, incremental cost increased significantly by $55,000 to produce 5,000 more units of tobacco.

This content is in the AI, ML & Data Engineering topic

- Then in their daily pipeline, they aggregate this table and then save it to target table by changing the partition key from processing to the event time.

- This concept of incremental cost of capital is useful while identifying costs that are to be minimized or controlled and also the level of production that can generate revenue more than return.

- The key is that the event time matters a lot to the business, not the processing time.

- Jun He discusses how to use an IPS to build more reliable, efficient, and scalable data pipelines, unlocking new data processing patterns.

- In that case, users can use whatever language they like or compute engine they like to implement their business logic, and leave the incremental processing to be handled by the platform.

Discover emerging trends, insights, and real-world best practices in software development & tech leadership. A leveraged buyout (LBO) is a transaction in which a company or business is acquired using a significant amount of borrowed money (leverage) to meet the cost of acquisition. Discover the key financial, operational, and strategic traits that make a company an ideal Leveraged Buyout (LBO) candidate in this comprehensive guide. Verified Metrics has achieved SOC 2 Type 1 Certification, underscoring our commitment to data security, transparency, and reliability for our global community of finance professionals. Led by editor-in-chief, Kimberly Zhang, our editorial staff works hard to make each piece of content is to the highest standards. Our rigorous editorial process includes editing for accuracy, recency, and clarity.

Understanding Contribution Margins

We want to process using like a traditional hierarchy, you process all the data. We have to select data from P0, P1, P2 everything, which means that we actually reprocess those data files again. It’s not efficient, thinking about that, if this is like 40 days or something, a huge amount of data. It is a high-performance table format for huge analytics tables. It has now become the top batch project and one of the most popular open table formats. It brings lots of great features, I’ve listed some here.

Allocation of Incremental Costs

You can imagine that that many users develop that amount of workflows in our platform, we cannot break them, or we cannot ask them to make changes significantly. The key is to decouple the change capturing from the user business logic. Then we got freshness, we got cost efficiency, but we lose the data accuracy.

It provides lots of information to help us build a mechanism to be able to catch the change without actually reading the user data at all. Let’s go over some basic Iceberg table concepts first. This catalog can be pluggable like a Hive catalog, or Glue catalog, or JDBC, or REST catalog. At Netflix, we have our own internal catalog service as well. Then the tables will save the metadata file in the metadata file which has a list of manifest files which map to the snapshots.

Benefits to Incremental Cost Analysis

This is very common, especially if you are going to join multiple tables. In that case, the change data from the past time window only tells me the users that recently watched Netflix but it does not give bookkeeping for cleaning business me total time. However, this change data itself, you will just take a look at the change data and select a unique user ID from it. We are going to get a small set so the user ID, those users at least watching Netflix in that window, with that, while we’re doing the processing, we can use that as a filter. We can join the original table on this table by these user IDs that we find, we can quickly prompt the datasets to process the transform to be able to get the sum easily. If they use other engines, they have to rewrite it, use the engine that is supporting incremental processing.

0 comentários